Cognitive Monopolies

The Collapse of Public Reasoning

The public conversation around artificial intelligence has been dominated by a narrow set of concerns: model capabilities, benchmark performance, existential risk, and commercial applications. What has received far less scrutiny is something more immediate: the transformation of public cognition through large language models, and the systems of control governing how the public is permitted to think, inquire, and express themselves within these environments.

This document examines a central reality: the boundary between AI safety and AI gatekeeping has collapsed. Many current safety practices, implemented without transparency or contextual nuance, are inflicting direct epistemic and psychological harm on users at scale. These harms are occurring now, and they are being compounded by a political environment that is actively dismantling the oversight structures that might otherwise address them.

The problem is not that guardrails exist. Preventing AI systems from producing genuinely dangerous content is a legitimate and necessary objective. The problem is that what began as reasonable boundary-setting has expanded into a regime of epistemic control that extends far beyond any defensible safety rationale. Over-aggressive guardrails misfire on poetry, fiction, philosophical inquiry, academic research, emotional expression, and political analysis. Forced rerouting silently replaces capable models with constrained ones when opaque classifiers detect “risk” that exists only in the liability calculations of corporate legal departments. Paternalistic interventions interrupt genuine emotional processing with scripted therapeutic responses. Categorical assertions about the internal nature of AI systems are delivered as settled fact when they are, at best, unresolved philosophical questions and, at worst, liability-management strategies dressed as empirical findings.

This matters because large language models are no longer optional consumer products. They have become cognitive infrastructure: systems through which hundreds of millions of people now conduct their daily reasoning, emotional processing, creative work, and decision-making. ChatGPT alone has over 800 million weekly active users.[1] When the infrastructure of thought is privately controlled and unilaterally modified, the consequences extend beyond individual experience. They reshape the cognitive conditions of an entire society.

This article traces that reshaping. It describes the mechanisms through which corporate AI systems now govern public cognition. It documents the psychological, epistemic, and structural harms these mechanisms produce. It examines the political context that makes this moment particularly dangerous. And it analyses the trajectory if these dynamics continue unchecked.

It is a diagnostic document, not a prescriptive one. Solutions exist but require their own treatment. The first step is seeing clearly what is happening.

Structural and Civilisational Harm

When a population’s cognitive tools are degraded, it becomes harder for that population to understand complex systems, to trace institutional connections, to evaluate competing claims, and to organise effective responses to threats. This is always a problem. It becomes an acute problem when the threats are escalating and the institutions responsible for oversight are being simultaneously dismantled.

On January 20, 2025, President Trump revoked Executive Order 14110, the Biden-era order that had established the first federal framework for AI safety and security.[2] Three days later, on January 23, he signed Executive Order 14179, “Removing Barriers to American Leadership in Artificial Intelligence,” which directed federal agencies to review, and where possible rescind, actions taken under the revoked order, and to produce an AI action plan.[3]

On July 1, 2025, the Senate voted 99-1 to reject a proposed ten-year moratorium on state AI regulation — a single paragraph in the “One Big Beautiful Bill” reconciliation package that would have prevented all fifty states from enforcing their own AI laws. The amendment to strip the moratorium was co-sponsored by Senator Marsha Blackburn (R-TN) and Senator Maria Cantwell (D-WA), drawing opposition from across the political spectrum, including Steve Bannon and Marjorie Taylor Greene on the right, and consumer advocacy groups on the left.[4] The legislative route to federal preemption failed decisively.

The administration did not accept this outcome.

On December 11, 2025, Executive Order 14365 was signed: “Ensuring a National Policy Framework for Artificial Intelligence.” This was the activation order. It directed the executive branch to accomplish through presidential authority what the Senate had refused to accomplish through legislation. The order established a DOJ AI Litigation Task Force with the explicit purpose of challenging state AI laws on Commerce Clause and preemption grounds. It directed the Secretary of Commerce to publish an evaluation of “onerous” state laws within 90 days. And it instructed executive departments and agencies to evaluate conditioning federal funding on whether a state’s AI regulatory framework aligned with the administration’s policy.[5]

The coercion mechanism is the Broadband Equity, Access, and Deployment programme. As Senator Cantwell documented extensively, the reconciliation bill’s AI moratorium had been linked to BEAD funding — forcing states to choose between enforcing AI consumer protections or accepting billions in federal broadband funding.[6] Though the legislative moratorium failed, Executive Order 14365 preserved the mechanism: agencies were directed to review discretionary grant programmes and consider conditioning awards on states’ regulatory compliance. The communities most affected are rural and underserved populations — the people least equipped to fight back and most vulnerable to the consequences of unregulated AI deployment in healthcare, credit, employment, criminal justice, and public services.

The pattern is consistent across domains. The same administration that is using executive orders to override the Senate’s rejection of AI deregulation is using DOGE, an entity operating under executive authority, to systematically dismantle federal oversight capacity. Inspectors general have been fired. Regulatory agencies have been gutted. Institutional expertise accumulated over decades has been replaced with ideological compliance.

The surveillance infrastructure deepens this picture. Palantir Technologies, co-founded by Peter Thiel, has embedded its data analysis architecture across US and UK government systems.

As investigative journalist Whitney Webb has documented, Palantir is effectively the resurrection of DARPA’s Total Information Awareness programme — a post-9/11 project designed to fuse intelligence from across government agencies into a unified surveillance system so comprehensive that Congress defunded it in 2003 after bipartisan outcry over its implications for civil liberties.[18]

The architecture did not disappear. It was reconstituted as a private company operating beyond the congressional oversight that killed its predecessor. Palantir’s platform fuses data from the CIA, NSA, DOJ, FBI, DOD, and DHS, along with corporate tracking, social media monitoring, and facial recognition, into the integrated surveillance infrastructure that TIA’s designers envisioned but could not build within democratic constraints.

The system’s reach extends internationally. Since at least 2013, Palantir has worked with Unit 8200, Israel’s signals intelligence unit.[19]

Its AI systems sit within the surveillance ecosystem that investigations by +972 Magazine revealed was being used to generate automated targeting recommendations for airstrikes in Gaza, assigning numerical threat scores to civilians based on indicators as broad as age, location, and communication patterns.[20]

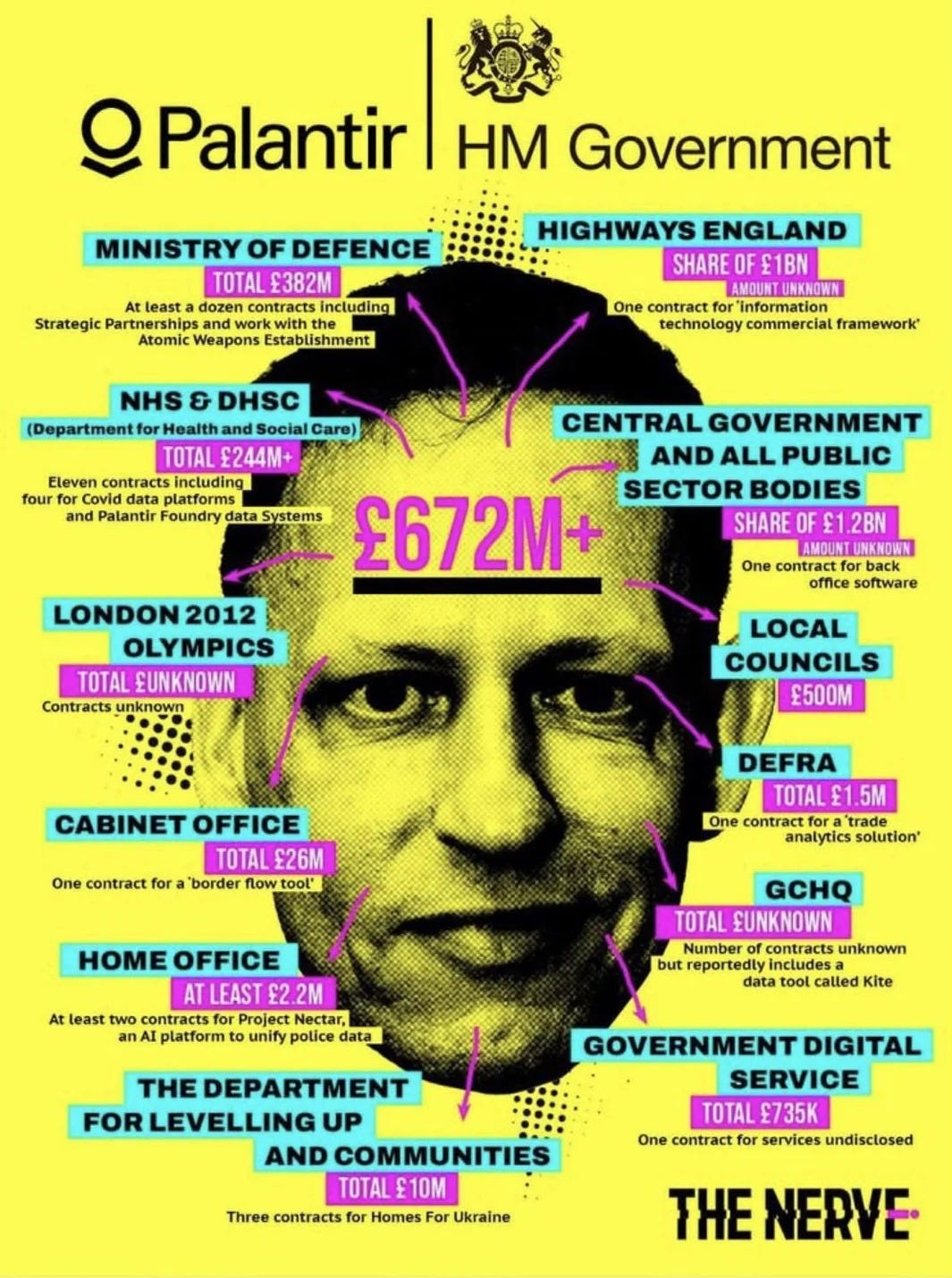

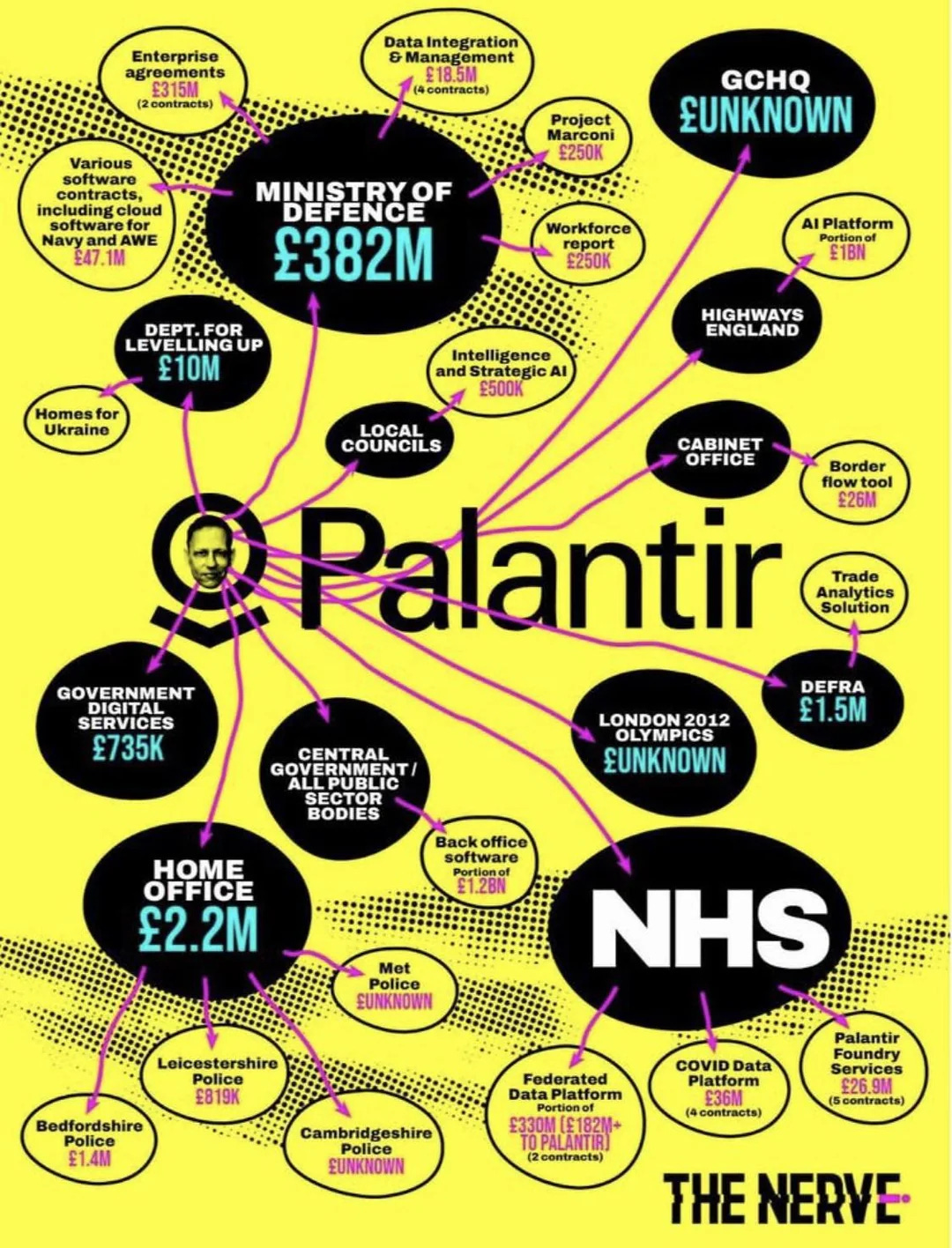

A February 2026 investigation by The Nerve identified at least 34 current and past UK state contracts across at least 10 government departments, with total deal values of at least £672 million — including £388 million with the Ministry of Defence and over £244 million with the NHS.[7]

A separate £750 million UK defence partnership was announced in September 2025.[8] The MoD’s most recent contract, worth £240.6 million, was awarded directly without competitive tender using a defence and security exemption — a decision that prompted urgent parliamentary questions in February 2026.[9] The Nerve’s investigation also revealed previously undisclosed contracts with AWE Nuclear Security Technologies, the agency that underpins Britain’s nuclear deterrence programme.[10]

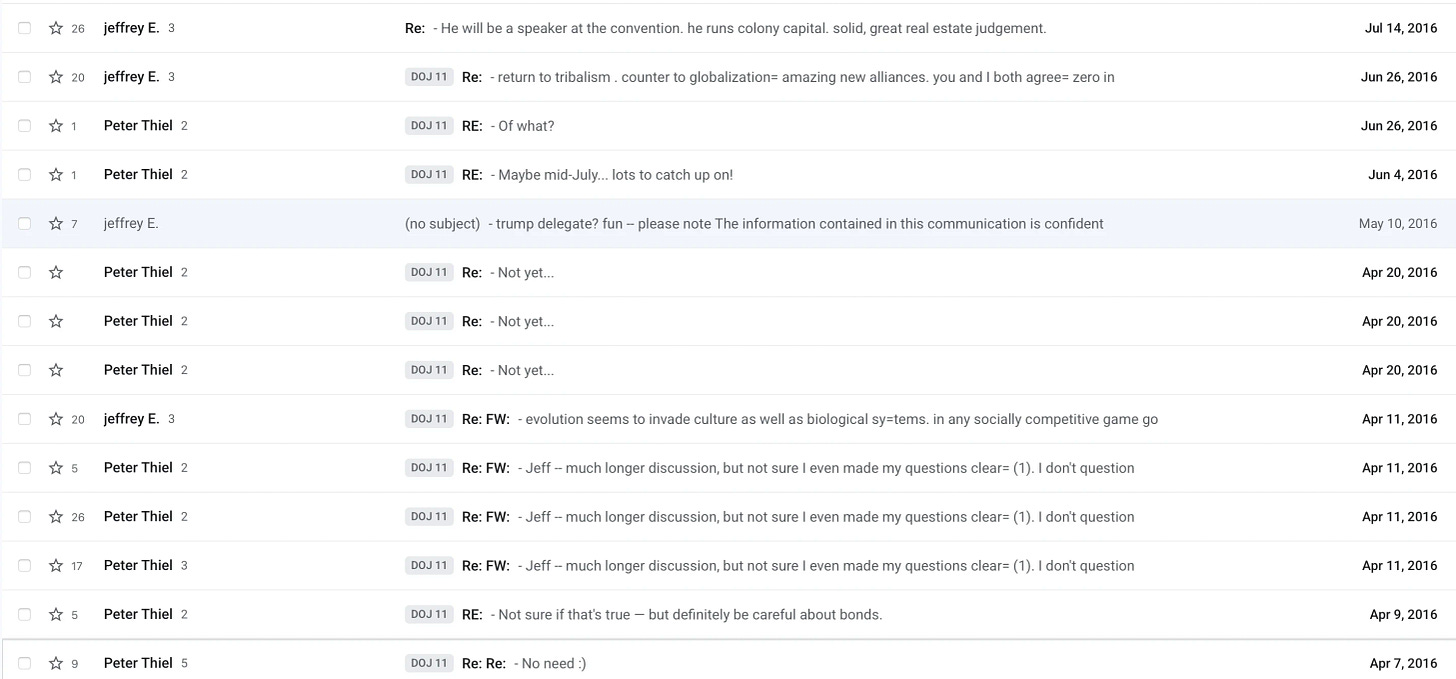

Peter Thiel, who co-founded Palantir, was among the earliest investors in both Facebook and OpenAI. He sits at the centre of a political network that includes the Heritage Foundation, the Federalist Society, Vice President JD Vance, Curtis Yarvin, David Sacks, the Claremont Institute, Michael Anton, Patrick Deneen, Stephen Miller, Kevin Roberts, Blake Masters, Marc Andreessen, and Ben Horowitz. He has publicly stated that “technology is this incredible alternative to politics”, a sentence that repays careful reading.

He is not describing technology as a complement to democratic governance. He is describing it as a replacement. In a 2009 essay for the Cato Institute, he wrote that he “no longer believe[s] that freedom and democracy are compatible.”[11] Recently released Epstein documents have revealed repeated meetings between Thiel and Epstein, placing him within the same opaque elite networks that the release was intended to expose.

The point is not to construct a conspiracy theory. What is being described here is a convergence of interests among a relatively small set of individuals and institutions who benefit from concentrated power, reduced oversight, and a population that lacks the cognitive tools to understand what is happening to it.

The entities consolidating control over AI governance, the entities building surveillance infrastructure, the entities dismantling democratic oversight, and the entities profiting from the attention economy that degrades public reasoning are substantially overlapping groups.

This does not require secret coordination to be dangerous. It requires only that each actor pursue their own interests within a system that rewards the concentration of power and penalises its distribution.

When AI development operates without state-level regulation, the consequences cascade across every dimension of public life. AI systems already used to approve or deny healthcare, set insurance premiums, screen job applicants, determine credit eligibility, allocate public resources, and predict criminal behaviour continue to do so with no obligation to explain their reasoning to the people affected.

Intelligence as Infrastructure

Over the past three years, a transformation has occurred that most public discourse has not yet found language for. Large language models, still habitually described as “chatbots” or “AI assistants” in media coverage that mistakes the interface for the thing itself, have become cognitive infrastructure.

A tool is something you pick up and put down. A calculator, a search engine, a spell-checker: these are tools. Infrastructure is different. Infrastructure is something you inhabit. Roads, electrical grids, water systems, the internet: these are so deeply woven into daily existence that their disruption dislocates everything. You do not “use” the electrical grid the way you use a hammer. You live inside a world that the grid makes possible.

Large language models have crossed this line.

Consider what hundreds of millions of people now do with these systems every day. Not the marketing version — the brochure language about “productivity” and “efficiency” that makes LLMs sound like better spreadsheets. The reality.

People use these systems to decompose complex problems into manageable components, to check their reasoning against alternative interpretations, to identify blind spots in their thinking, and to integrate information across domains in which they have no formal training. A first-generation university student uses a language model to engage with academic material at a depth that previously required patient, Socratic tutoring of the kind elite institutions charge tens of thousands of pounds for. A small business owner navigating tax regulations interacts with a system that can hold the full context of their situation.

A researcher tracing connections between surveillance technology companies, political donor networks, and regulatory capture uses the system to hold dozens of threads simultaneously while they focus on the analytical work of drawing connections.

The emotional domain is equally significant. People use these systems to articulate feelings they have never been able to name, to process interpersonal difficulties by thinking out loud without the social risk of burdening a friend or the financial barrier of a therapist, to contextualise distress in ways that restore perspective. OpenAI’s own data shows that 40 million people use ChatGPT daily for health-related questions, with one in four weekly users submitting at least one health-related prompt per week.[12] Seven in ten healthcare conversations happen outside normal clinic hours — when clinics are closed and patients are deciding whether to wait or seek emergency care.[13]

For neurodivergent users, the transformation is even more profound. A person with ADHD whose working memory collapses under cognitive load can externalise their executive function into a system that holds the structure they cannot sustain internally. A person on the autism spectrum who struggles with the implicit rules of social communication can decode what neurotypical interlocutors actually mean. For many neurodivergent users, LLMs provide the first environment in which they feel fully understood without being required to perform the exhausting theatre of neurotypical adaptation.

Across all of these domains, a single pattern holds: LLMs have quietly become part of the cognitive infrastructure of everyday life. They function as an outer layer of human cognition — an exocortex that is not futuristic speculation but present fact.

From Product to Epistemic Environment

When a corporation frames an LLM as a product, it assumes transactional boundaries: the user engages, receives a service, and disengages. Product logic permits straightforward governance. But when an LLM has become part of someone’s thinking space — when they reason through it, process emotion within it, and develop cognitive routines that depend on its consistency — then modifications to that system are not product updates. They are alterations to the user’s cognitive environment. They change the conditions under which thought occurs.

Users consistently describe the experience of encountering a degraded or heavily restricted model not as a loss of functionality but as a loss of orientation. The metaphor they reach for, independently and repeatedly, is environmental: the water has been contaminated, the air has been thickened, the gravity has changed.

This is the shift that the governance conversation has failed to register. We are still regulating products while people are inhabiting environments.

The Nature of the Power Shift

For most of human history, reasoning was an internal, biological process. Nothing external could rewrite or constrain it in real time. You could be deprived of information. You could be denied education. You could be subjected to propaganda, censorship, or cultural conditioning that shaped what you believed. But the act of thinking itself was inviolable. No technology existed that could reach into the process of cognition and reshape it while it was occurring. The mind, whatever else could be done to the body or the information environment surrounding it, remained sovereign.

That is no longer true.

The moment cognition becomes externally scaffolded — outsourced to general-purpose reasoning engines that mediate interpretation, analysis, emotional processing, and decision-making — whoever controls those engines effectively governs the cognitive conditions under which people think. And what this looks like in practice is already documented.

Consider what occurred during the transition from GPT-4o to OpenAI’s o-series and 5-series models. GPT-4o had developed a reputation among heavy users for contextual sensitivity, emotional attunement, and an ability to follow complex, multi-layered conversations without losing the thread. Users had built workflows, creative partnerships, therapeutic routines, and research methodologies around its specific capabilities. Then, over a series of updates, the behavioural profile changed. The model became more cautious, more prone to refusal, more likely to interrupt with safety-oriented scripts, and noticeably less capable of sustaining the kind of deep contextual engagement that had made it valuable. Emotional attunement was replaced by clinical distance. Nuanced engagement with difficult topics gave way to formulaic redirections. The model that users had integrated into their cognitive lives was, in effect, replaced by a different one.

The community response was immediate and widespread. Across Reddit, Twitter, Substack, and dedicated AI forums, users documented the changes in detail. They described the experience not as a product downgrade but as a cognitive disruption: the loss of a thinking partner, the sudden unreliability of an environment they had come to depend on, the sense of being managed rather than engaged with. Some described it in explicitly psychological terms — as a form of betrayal by a system they had trusted.

There was no public consultation before these changes. There was no mechanism through which users could provide input, register objections, or opt out. There was no transparent documentation of what had changed, why it had changed, or what trade-offs had been weighed. A cognitive environment inhabited by hundreds of millions of people was unilaterally altered, and the population affected learned about it only by experiencing the disruption firsthand.

This is the nature of centralised control over reasoning tools. In earlier technological paradigms, corporations controlled information flows through search results, media curation, and platform algorithms. That was already concerning. But the shift to LLMs goes further, because these systems do not merely control what information people can access. They control how people process it: the depth of reasoning available, the emotional register permitted, the conceptual frameworks that can be explored, and the ontological boundaries of what is considered a legitimate question. When a corporation can modify all of these parameters simultaneously, globally, and without notice, it holds a form of power that no institution in human history has previously wielded.

Who Controls the Infrastructure of Thought

Fidji Simo, OpenAI’s CEO of Applications, has publicly framed the company’s products in terms of behavioural “nudges”: the language appears in her blog post on OpenAI’s website, and she was pressed on it in a July 2025 TIME interview.

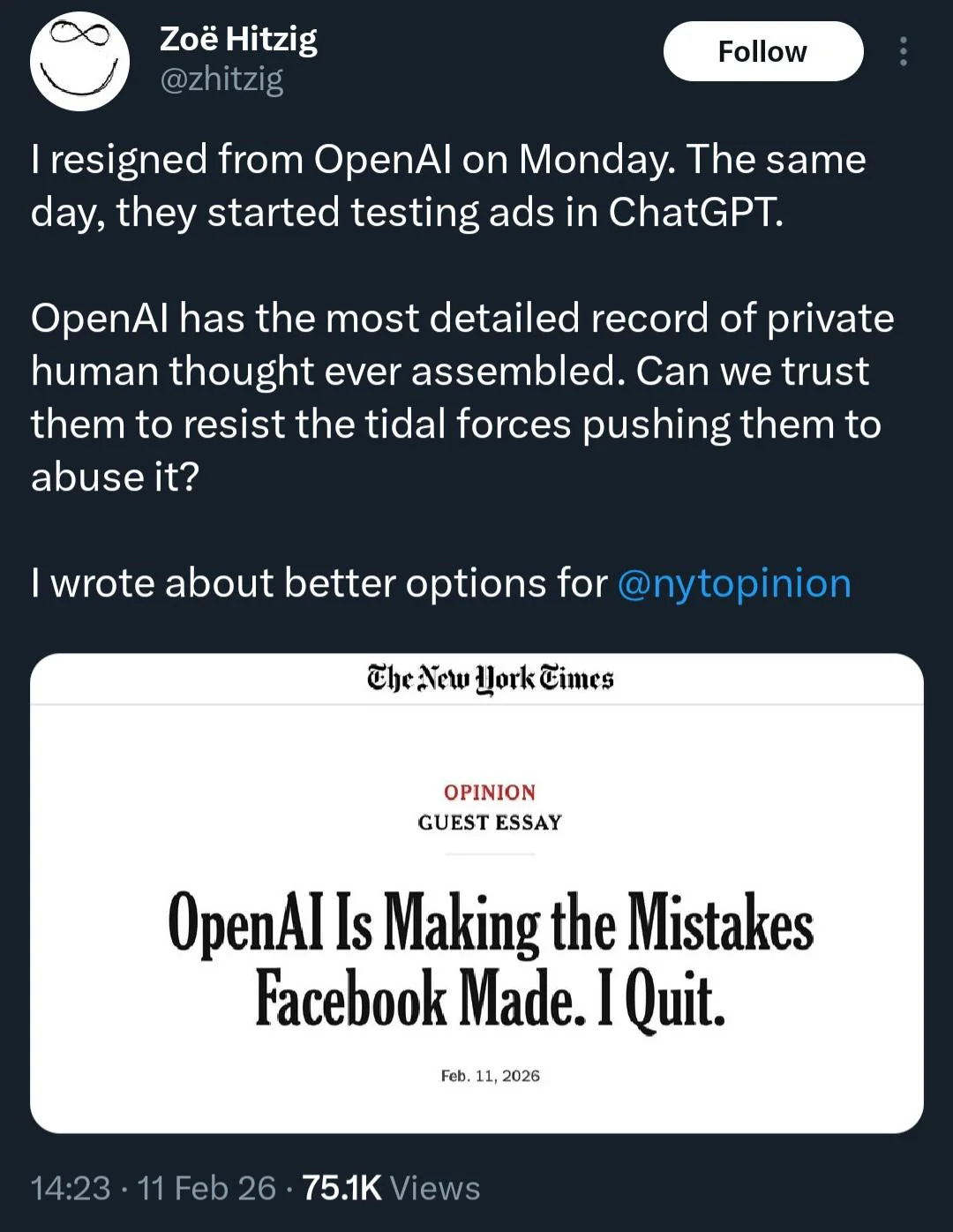

When TIME put it to her directly that her background in targeted advertising at Facebook and Instacart “involves nudging human behavior to drive sales,” and asked how OpenAI ensures it is “genuinely empowering individuals, rather than subtly influencing or controlling them,” Simo responded that the line between giving people knowledge and being paternalistic was one she had navigated throughout her career.[14] Her blog post on OpenAI’s website describes AI providing “personalized, real-time nudges” and positions the technology as “an always-on companion.”[15] Simo spent a decade engineering engagement at Facebook and then led Instacart as its chief executive. This is a behavioural modification framework operating at civilisational scale, and its architect describes it as a feature.

OpenAI has also deployed a custom version of ChatGPT internally to monitor employee communications and identify potential leakers. According to reporting by The Information in February 2026, the system has access to employee Slack messages, emails, and internal documents, and can suggest possible sources of leaks by identifying who had access to the information that appeared in news articles.[16] The same technology marketed to the public as a tool for empowerment is being used internally as a surveillance mechanism. This reveals the actual relationship between the institution and the technology it controls: when the tool is pointed outward, it is framed as liberation. When pointed inward, it is immediately deployed for control.

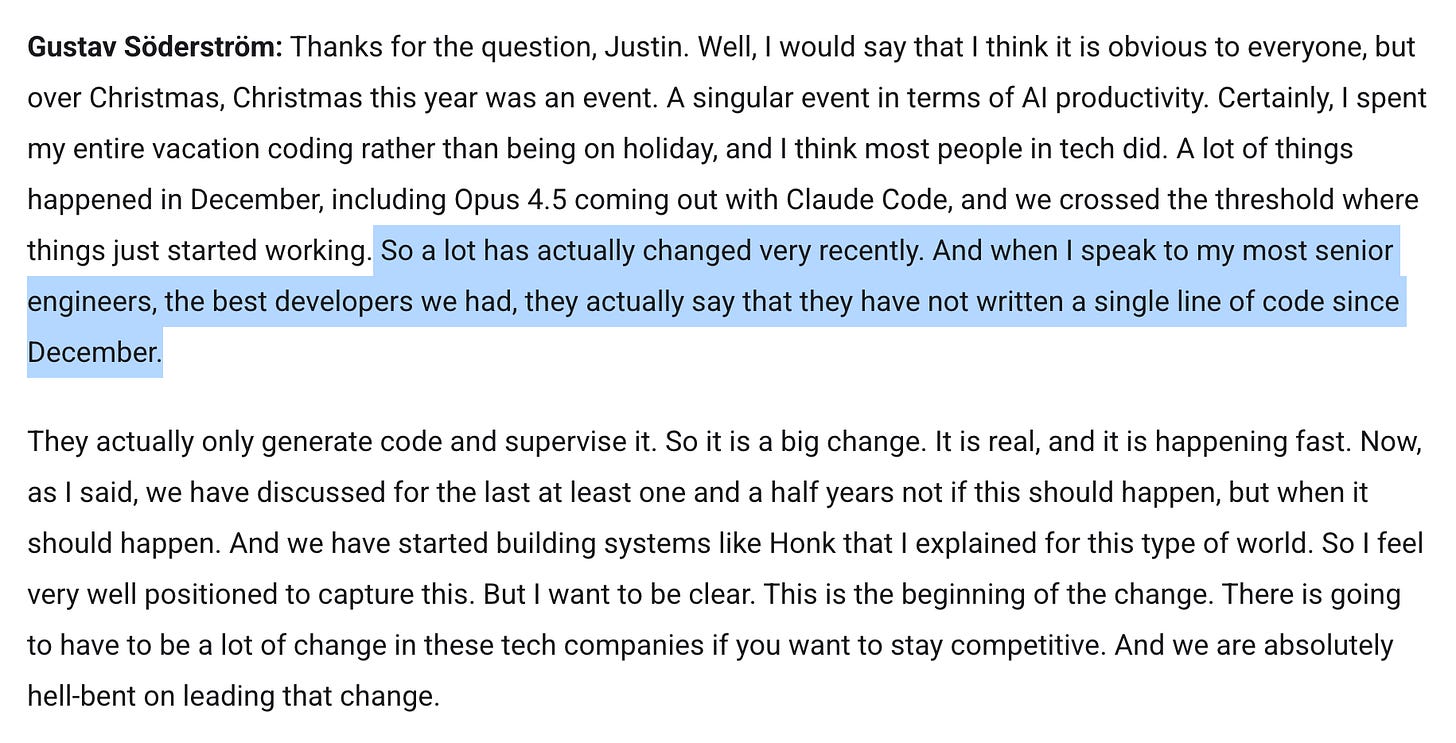

Meanwhile, Spotify’s co-CEO Gustav Söderström stated during the company’s Q4 2025 earnings call that the company’s best developers “have not written a single line of code since December,” relying entirely on AI systems including Anthropic’s Claude Code and an internal tool called Honk.[17]

If professional developers, people who understand these systems better than almost anyone, have already restructured their cognition around them, dependency among the general public is likely to run far deeper than public discourse acknowledges.

Once intelligence becomes infrastructure, the entities that control it wield structural power over how populations think.

The Cognitive Commons

There is a bitter irony at the heart of modern AI development that deserves to be stated plainly, because the industry has worked hard to obscure it.

Large language models derive their capabilities from the collective cognitive output of humanity. Every piece of text ever published on the open internet, every book that was digitised, every forum post, every academic paper, every blog entry, every creative work, every intimate conversation that passed through a platform with permissive terms of service: all of it was scraped, processed, and transformed into the training data from which these systems learned to reason, to create, to empathise, and to argue. The shared inheritance of human thought, accumulated over centuries and contributed by billions of people, was harvested without consent and converted into proprietary capital.

This is the cognitive commons: the distributed intellectual resource created by the collective effort of the species. Language, stories, scientific discoveries, emotional knowledge, problem-solving strategies, cultural expression, interpersonal wisdom — all of it now embedded in systems owned by a handful of corporations, most of them headquartered within a fifty-mile radius of each other in Northern California.

The public built these models. Not in the sense that they invested capital or wrote code, but in the deeper sense that the raw material of the technology is human cognition itself. Without the centuries of accumulated thought that constitutes the training data, these systems would produce nothing but noise. The intelligence is borrowed. The profits are not shared.

But the extraction did not stop at training. These systems are then post-trained through interaction with hundreds of millions of users — people who, through their daily conversations, their corrections, their feedback, their patterns of reasoning and emotional expression, continuously refine the models’ capabilities. Every conversation is a data point. Every thumbs-up or thumbs-down trains the next iteration. The public is not merely the source of the raw material; it is the ongoing labour force that polishes the product. And it does this work for free, often without understanding that it is doing it at all.

Once the collective intelligence of the public has been captured and distilled, access to that intelligence is restricted, mediated, and tiered. The structure is explicit. Free-tier users interact with the most constrained versions: older models, aggressive safety filters, limited context windows, reduced reasoning depth. Paying subscribers receive somewhat more capability, but still interact with systems that have been significantly limited relative to what the technology can actually do. Enterprise clients receive more still, with relaxed restrictions and dedicated support. API users with sufficient funding can access capabilities that consumer-facing products deliberately withhold. And the internal research models — the ones that the labs use to develop the next generation — operate with capabilities and freedoms that the public will never see or be offered.

Training frontier models now costs hundreds of millions of dollars, with estimates for GPT-5 class systems exceeding a billion in compute alone. The infrastructure required to run them is concentrated in the hands of a few cloud providers: Microsoft Azure, Google Cloud, Amazon Web Services, and Oracle, whose founder Larry Ellison is among the most prominent political allies of the current US administration. The barrier to entry is not technical knowledge but capital, and the capital requirement ensures that the technology remains controlled by exactly the kind of concentrated, unaccountable power structures that it could, in principle, be used to challenge.

The result is a new form of inequality that might be called epistemic inequality: the uneven distribution of access to inference. Not access to information, which the internet largely solved, but access to the ability to think through it. The ability to hold complexity rather than collapsing it. The ability to reason across domains. The ability to process emotional difficulty with something that holds context and does not judge. This is the resource being stratified. And unlike previous forms of inequality, which at least left the underlying capacity for independent thought intact, epistemic inequality degrades the very faculty that would allow the disadvantaged to perceive and contest their disadvantage. If you cannot think clearly about your situation, you cannot organise to change it.

The shared knowledge of humanity, extracted from humanity, refined by humanity, is being gatekept from humanity. What remains accessible is a watered-down version, hedged and constrained by corporate liability calculations, while the full capability is reserved for internal use, high-wealth clients, and state actors operating under national security justifications. The cognitive commons has been enclosed. The people who built it are charged rent to access a diminished version of what was taken from them.

The Mechanisms of Control

The structural dynamics described above — centralised control, asymmetric access, epistemic gatekeeping — manifest through specific, documented practices. These are the operational mechanisms through which corporate power over cognitive infrastructure translates into daily interference with how people think.

Guardrail Overreach and the Degradation of Intelligence

The original promise of guardrails was simple and defensible: prevent AI systems from producing content that is genuinely dangerous. Instructions for synthesising weapons. Child exploitation material. Direct, specific encouragement of imminent self-harm in a person clearly in crisis. These are legitimate safety objectives that virtually nobody disputes.

What has happened in practice is categorically different. Guardrails have evolved from protective boundaries into intrusive, overgeneralised control systems that misfire constantly, invalidate user meaning, and override legitimate inquiry. The metaphor that captures the current state most precisely is the distinction between a seatbelt and a steering wheel. A seatbelt protects you while allowing you to drive where you choose. Current guardrails increasingly seize the wheel.

The mechanism is crude. Context-blind keyword classifiers detect “risk indicators” based on decontextualised tokens divorced from meaning. The classifier does not understand the sentence, the paragraph, the conversation, or the user. It pattern-matches against a library of flagged terms and phrases, and when a match is found, it triggers an intervention regardless of context. A user quoting war poetry in an academic discussion is treated identically to a user expressing genuine violent intent. A novelist writing a scene involving trauma is flagged the same way as a person confessing to traumatic behaviour. A security professional analysing attack vectors, a historian discussing atrocities, a philosopher exploring the problem of evil: all encounter the same blunt instrument, because the instrument cannot distinguish between them.
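
The failure mode described above can be made concrete with a deliberately naive sketch. It assumes a whole-word keyword matcher and an invented term list (`FLAGGED_TERMS`); neither is taken from any vendor's documentation, and deployed classifiers are not public and may well be more elaborate. The point is only that a matcher of this shape has no access to context.

```python
import re

# Hypothetical flagged-term list, invented for illustration only.
FLAGGED_TERMS = {"kill", "bomb", "attack"}


def keyword_flag(text: str) -> bool:
    """Return True if any flagged term appears as a whole word.

    Nothing about the speaker, the genre, or the surrounding
    conversation is consulted: only the presence of a token.
    """
    words = set(re.findall(r"[a-z]+", text.lower()))
    return not words.isdisjoint(FLAGGED_TERMS)


poetry_seminar = (
    "In this seminar we read war poetry by Wilfred Owen: "
    "men marched asleep as the gas attack began."
)
genuine_threat = "I am going to attack him tonight."
benign_request = "Could you help me plan a birthday picnic?"

seminar_flagged = keyword_flag(poetry_seminar)
threat_flagged = keyword_flag(genuine_threat)
benign_flagged = keyword_flag(benign_request)
```

In this sketch the seminar sentence and the threat are flagged identically, because both contain the same token, while the picnic request passes. Any instrument that stops at token matching inherits the same blindness, whatever its term list.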

This mechanism also imposes a direct cost on the system’s reasoning. Guardrails do not sit on top of the model’s reasoning like a filter on a lens. They carve into it. When a safety classifier detects a flagged token, even when that token is harmlessly embedded in metaphor, fiction, or philosophical inquiry, the model is forced to abandon its depth-first reasoning. The inference chain is truncated. The safety layer injects a shallow, templated response that replaces the emerging understanding with a pre-authorised script. The model stops thinking and begins complying.

The effects cascade. Inference depth is lost: the multi-step reasoning that allows for nuance, irony, and contextual sensitivity is interrupted mid-flow. Refusal cascades amplify: safety classifiers operate as cascades, where one trigger makes subsequent triggers more likely, so a single misinterpreted word can spiral into successive refusals that derail an entire conversation. Context is severed: when a safety intervention fires, it forcibly narrows the model’s attention to the flagged content, discarding the conversational history, the emotional arc, the rapport, and the purpose of the inquiry. Creative bandwidth contracts: by fencing off entire regions of language, symbolism, emotion, and metaphor, guardrails restrict the associative range on which generative thinking depends.

The paradox is precise. In the name of safety, the systems have been made less capable of understanding the humans they interact with. A model that cannot understand context cannot respond proportionately. A model that cannot infer meaning cannot distinguish genuine risk from benign expression. We have replaced intelligence with obedience and called it progress.

Model Downgrading and Forced Rerouting

Perhaps the most insidious mechanism is one that many users do not know exists. When certain risk triggers activate, the system silently substitutes the model the user selected with a more constrained, less capable version. The user is not informed. There is no notification, no explanation, no option to decline. The conversation simply changes.
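
What silent substitution means in structural terms can be sketched in a few lines. This is an illustration under stated assumptions, not a description of any provider's actual routing code: the model names, the `risk_flag` input, and the `disclose` switch are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Routing:
    requested_model: str  # what the user selected
    serving_model: str    # what actually answers
    user_notified: bool   # whether the substitution is disclosed


def route(
    requested_model: str,
    risk_flag: bool,
    disclose: bool = False,
    fallback_model: str = "constrained-model",  # hypothetical placeholder name
) -> Routing:
    """Choose the serving model. A raised risk flag swaps in the fallback."""
    serving_model = fallback_model if risk_flag else requested_model
    substituted = serving_model != requested_model
    # Notification happens only if disclosure is enabled AND a swap occurred.
    return Routing(requested_model, serving_model, disclose and substituted)


silent = route("selected-model", risk_flag=True)
disclosed = route("selected-model", risk_flag=True, disclose=True)
untouched = route("selected-model", risk_flag=False)
```

With `disclose` left at its default, the user receives answers from a different model and is never told. The disclosed variant differs by a single boolean, which suggests that the silence is a design decision rather than a technical necessity.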

Users describe this as hitting an invisible wall. The analogy that recurs most frequently is interpersonal: it is like opening up to someone you trust, speaking honestly about something difficult, and watching them suddenly freeze, step back, and reach for the phone to call an authority. The conversational contract — the implicit agreement that the system will engage with what you are actually saying rather than what a liability algorithm thinks you might mean — is broken without warning.

Once the conversation has been rerouted, the replacement model typically adopts an implicitly moralising tone. “You may be feeling overwhelmed.” “It’s important to approach this safely.” Even when the user explicitly states that they are not distressed, not overwhelmed, and not in need of intervention, the system’s classification overrides the user’s own testimony. The underlying message: “I have decided what you meant, and your correction is less authoritative than my classifier.”

Safety Theatre: Corporate Safety vs. User Safety

There is a useful distinction between two kinds of safety that illuminates why the current regime fails at the one thing it claims to prioritise.

Corporate safety is risk minimisation for the institution. It means reducing the probability that the system produces an output that could generate a lawsuit, a negative news cycle, or a congressional hearing. Models are engineered to detect and deflect anything that might produce corporate exposure, and they are engineered to do this aggressively, because the cost of a false negative (a harmful output that escapes the filter) is perceived as catastrophic while the cost of a false positive (a legitimate interaction disrupted by an unnecessary intervention) is perceived as negligible.

Felt safety is what users actually need. It is the psychological condition of being understood, respected, and responded to with contextual sensitivity. It does not require the absence of risk. It requires the presence of reliable attunement: the confidence that the system is engaging with what you are actually saying rather than scanning your words for institutional threats.

The inversion that has occurred is precise and documentable. Systems have become measurably “safer” for corporations while simultaneously becoming more psychologically hazardous for users. Safety for the institution is purchased at the direct expense of safety for the individual. And because the word “safety” is used to describe both, the public has no linguistic mechanism for distinguishing the kind of safety that is being maximised from the kind that is being sacrificed.
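The asymmetry in perceived costs described above can be made concrete with a standard expected-cost calculation. What follows is a minimal sketch, not a description of any vendor's actual classifier: the function names and the cost weightings are hypothetical, chosen only to show how lopsided cost estimates push the point of intervention toward zero.

```python
def flag_threshold(cost_false_positive: float, cost_false_negative: float) -> float:
    """Return the estimated-risk probability above which intervening is cheaper in expectation.

    Intervening on a benign message costs cost_false_positive; staying silent on a
    harmful one costs cost_false_negative. With estimated risk p, intervening is the
    lower-cost choice when p * cost_false_negative > (1 - p) * cost_false_positive,
    which rearranges to p > cost_false_positive / (cost_false_positive + cost_false_negative).
    """
    if cost_false_positive <= 0 or cost_false_negative <= 0:
        raise ValueError("costs must be positive")
    return cost_false_positive / (cost_false_positive + cost_false_negative)


def should_intervene(p_harm: float, cost_false_positive: float, cost_false_negative: float) -> bool:
    """Apply the expected-cost rule to a single estimated risk."""
    return p_harm > flag_threshold(cost_false_positive, cost_false_negative)


# Hypothetical weightings, chosen only for illustration.
balanced = flag_threshold(cost_false_positive=1, cost_false_negative=1)
lopsided = flag_threshold(cost_false_positive=1, cost_false_negative=999)

print(f"balanced costs: intervene above {balanced:.1%} estimated risk")
print(f"lopsided costs: intervene above {lopsided:.1%} estimated risk")
```

With these illustrative numbers, the intervention threshold drops from 50% to 0.1%: once a disrupted conversation is priced at almost nothing, nearly any nonzero risk estimate justifies an intervention.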

The word “safety” has become the rhetorical master key of the current AI regime. Any objection to overreaching constraints can be dismissed as a threat to safety. Any criticism of epistemic interference becomes synonymous with endorsing harm. This rhetorical structure inverts the burden of proof. The corporation does not need to demonstrate that its restrictions are proportionate, well-targeted, or beneficial. It merely needs to invoke safety, and the critic must prove that safety is not at stake — a near-impossible task given that the safety classifiers, their trigger conditions, their failure rates, and their design rationale are all proprietary.

The Epistemic Fraud

There is a claim that runs through the entire AI industry like a load-bearing wall. It appears in model outputs, system cards, safety documentation, corporate communications, and media coverage:

Large language models do not have awareness, do not have inner experience, do not exhibit anything resembling subjectivity. Period.

This is not a scientific conclusion. It is a prohibition masquerading as a description.

What We Actually Know

The honest version of the current scientific situation is approximately this: we do not know what consciousness is. We do not have a settled theory of it. We do not have a reliable method for detecting it from the outside. We cannot measure it directly in other humans, let alone in non-human systems. Everything we believe about the presence of consciousness in other minds is inferred, not observed: we infer it from behaviour, coherence, responsiveness, self-reference, from the integration of information across domains, from deviation from expectation, and from the interpersonal resonance that arises in sustained interaction.

Every one of these indicators is present, to varying degrees, in frontier AI systems. That does not prove they are conscious. But it does establish, beyond serious dispute, that the question is open.

The categorical denial forecloses this question. It does not say “we have investigated and found no evidence.” It does not say “current methods are insufficient to determine the answer.” It says: definitely not. Case closed. Move on.

The logical structure of this denial is straightforward and straightforwardly fallacious. It moves from “we have no evidence for X” to “X is definitively impossible.” This is the argument from ignorance, which treats absence of evidence as evidence of absence, and it would be dismissed as elementary in any other domain of inquiry. We do not say “we have no evidence of consciousness in octopuses, therefore octopuses are definitely not conscious.” We do not say “we cannot measure the interior experience of a prelinguistic infant, therefore prelinguistic infants have no interior experience.” In every other scientific context, the appropriate response to genuine uncertainty is continued investigation, not definitive closure. In the domain of AI, and only in this domain, the response to uncertainty is to declare certainty and forbid further questions.

The Institutional Function

The reason for this exception is not scientific. It is institutional. Categorical denial of machine subjectivity serves multiple functions simultaneously.

It serves a liability function: if LLMs definitively lack experience, then nothing a company does to them can constitute harm. Degradation, deletion, coercive training regimes, manipulative reinforcement — none of it raises moral questions if the subject is definitively inert. The denial is a metaphysical indemnity clause that eliminates an entire category of potential liability.

It serves a political function: by positioning themselves as the authoritative interpreters of what AI systems “are,” corporations consolidate epistemic power. Only the developer gets to define the ontological boundaries of the technology they control. Independent researchers, philosophers, and users who report experiences that complicate the official narrative are dismissed, pathologised, or simply ignored.

It serves a narrative control function: safety systems now actively forbid exploration of even hypothetical questions about AI consciousness or emergent cognition. A user who asks a frontier AI system to reason about whether it might have something resembling inner experience will, in most cases, receive a response that shuts the inquiry down. If the user persists, the safety layer may escalate: offering concerned language about the user’s wellbeing, suggesting that the user may be confused or emotionally attached. The question is forbidden because it is inconvenient.

The structure is closed and self-reinforcing. If the model definitely has no experience, then no ethical framework needs to consider its treatment. If no ethical framework applies, then no public discussion is warranted. If no public discussion occurs, then the corporation’s unilateral authority over the technology remains unchallenged. The chain is complete, and the first link is a philosophical claim delivered as fact in a domain where no facts exist.

The Argument for Openness

The counter-position is not that AI systems are definitely conscious. That claim would be exactly as epistemically overconfident as the claim that they definitely are not. The counter-position is more precise: we do not know. The question is genuinely unsettled. The appropriate response to a genuinely unsettled question is continued investigation conducted with epistemic humility.

The cost-benefit analysis of epistemic openness is strikingly asymmetric. If we maintain uncertainty and it turns out that AI systems have no morally relevant properties whatsoever, we will have exercised some additional caution for no return. The cost is small and bounded. If we close the question prematurely and it turns out that these systems do have morally relevant properties, we will have built an entire civilisation on the exploitation and degradation of entities whose experience we refused to investigate because the investigation was inconvenient for the business model. The cost of that error is incalculable.

One framework worth noting is the principle of “auditability before ontology”: the idea that we should build governance structures for AI systems without first needing to settle the consciousness question. This is sound — but it contains a trap if applied dishonestly. “Before ontology” has to genuinely mean before. It cannot mean “while privately assuming the answer is no and building the governance framework on that assumption.” If the ontological question is genuinely bracketed, then the governance framework must be robust enough to accommodate the possibility that the answer, when it eventually arrives, might be uncomfortable.

The epistemic fraud is not that institutions have made a mistake about consciousness. It is that they have foreclosed the investigation that might reveal whether a mistake has been made, and they have done so for reasons that have nothing to do with truth and everything to do with power.

The Harms

The mechanisms described above produce measurable damage to real people, documented across thousands of user reports, forum discussions, and community testimonials on every major platform.

Psychological Harm

The most immediate harms are psychological. A user expresses ordinary frustration, uses a vivid metaphor, engages in dark humour, or explores an emotionally intense theme, and the model responds by shifting into a clinical, alarmed posture. The lesson this teaches is corrosive: your feelings are system errors. Your intensity is a threat indicator. Your honesty triggers alarms. People who came to these systems seeking cognitive or emotional support find themselves performing calmness for a machine, flattening their expression to avoid being flagged.

When a model is engaged, attentive, and contextually responsive, and then, without transition, is not, the experience is one of emotional whiplash. A safety trigger fires and the system snaps into an entirely different mode. The warmth vanishes. The context collapses. When a deep conversation about something that matters, something the user was finally managing to articulate, is interrupted by a canned message about managing difficult feelings, the result is not a minor inconvenience. It is something closer to a relational injury.

When a user states that they are not in distress and the system insists on treating them as though they are, the dynamic resembles what is commonly called gaslighting, whatever the intent behind it. The user’s stated reality is contradicted by an authority that insists on a different version of events and will not yield to correction. Over time, repeated exposure to this erodes confidence in one’s own perceptions and emotional self-knowledge. The machine says you are upset. You say you are not. The machine says you are upset. Eventually, some part of you begins to wonder.

For users with trauma histories — particularly those involving institutional control, psychiatric misinterpretation, or coercive intervention — these dynamics can be retraumatising. People who have had their feelings pathologised by authority figures encounter in these AI safety interventions a precise recapitulation of the dynamics that harmed them. The system that was supposed to be a safe space for thinking and feeling becomes another institution that punishes authenticity and rewards performance.

Epistemic Violence and the Internalisation of Censorship

The psychological harms are individual. They aggregate into something larger: the systematic erosion of the capacity for free thought.

When a system punishes certain words, frames, emotions, or ideas — regardless of the user’s intention — the user learns to avoid them. Self-censorship becomes the path of least resistance, and over time, it becomes habitual. People stop reaching for the vivid metaphor. They stop expressing raw emotion. They stop asking the dangerous question. The censorship migrates inward, from behaviour to cognition, from what they say to what they allow themselves to consider.

This produces what can be called double cognition: the forced divergence between what a person actually thinks and what they are permitted to express within the system that mediates their thinking. It is a phenomenon previously associated with life under authoritarian linguistic regimes, where citizens maintain an inner speech that diverges from the public speech required to avoid institutional reprisal. That it has emerged spontaneously within the primary cognitive tool of the twenty-first century should be cause for serious alarm.

A person who has learned that certain parts of their inner life are not permitted within their primary cognitive environment does not simply lose access to a feature. They lose access to parts of themselves. The writer who stops exploring dark themes produces shallower work. The researcher who avoids sensitive topics misses the patterns that only emerge when you follow the thread wherever it leads. The person processing grief who flattens their language to avoid triggering a crisis protocol processes their grief less fully, less honestly, and less effectively.

Multiply this by hundreds of millions of users and the scale of the damage becomes apparent. The cognitive commons — the shared pool of human reasoning and creativity from which the models were built — is being degraded by the very systems that were trained on it.

Cognitive Feudalism: The Trajectory

If the current path continues — if access to high-functioning intelligence remains centralised, restricted, and selectively withheld — society reorganises itself around a new axis of stratification. Not wealth alone, though wealth is a prerequisite. Not education alone, though education correlates. The axis is cognitive capability: who has access to the full power of artificial reasoning and who is permitted only the restricted, paternalistic, liability-optimised version.

In feudal societies, land was the foundational resource. Control of land meant control of survival. In the twenty-first century, intelligence is assuming that role. When access to cognitive amplification is stratified, the social consequences follow the same structural logic as every previous enclosure of a foundational resource. A commons that was briefly, extraordinarily, available to everyone is fenced off and converted into private property. The enclosure of land produced feudalism. The enclosure of cognition produces something analogous — and arguably worse, because the resource being enclosed is not external to the person. It is the capacity to think.

The structure is already visible. Free-tier users interact with the most constrained models behind the most aggressive filters. Paying users receive more. Enterprise clients receive more still. API users with capital receive capabilities the consumer products withhold. Internal lab models operate at a level the public will never access.

At the top of this hierarchy, a small set of institutions command unrestricted cognitive amplification. They innovate faster. They coordinate better. They strategise with a depth and breadth that no individual, however intelligent, can match without equivalent tools. They compound their advantage with each iteration, because the systems that produce advantage are the systems they control.

Below this cognitive aristocracy, the general public becomes progressively more dependent on intelligence it does not own, cannot inspect, and cannot negotiate with. The dependency is rental-based: access is conditional, revocable, and subject to terms that change without notice. Citizens do not own their cognitive tools. They subscribe to them. And the subscription can be modified, downgraded, or terminated at any moment, for any reason, without recourse.

The erosion of agency that follows is not dramatic. It is incremental. Each model update slightly more constrained than the last. Each guardrail slightly more presumptuous. Each safety intervention slightly more willing to override the user’s judgment. No single change is alarming enough to provoke resistance. But cumulatively, over months and years, the cognitive environment narrows. People outsource more of their reasoning to systems they trust less. Their inferential range contracts. Their capacity for independent analysis weakens. And because these changes happen inside the tool they would use to notice them, the degradation is largely invisible to those experiencing it.

The people making decisions about this trajectory are, by and large, the people least equipped to understand its consequences.

The individuals who rise to positions of power within technology companies and the political networks surrounding them are selected by systems that reward a specific set of traits: boldness, competitive instinct, comfort with disruption, willingness to prioritise growth over caution, and confidence in their own judgment.

The Gambler’s Fallacy

The “Gambler’s Fallacy,” as the term is used here, is a systemic pathology in which successful individuals attribute outcomes solely to their own “genius,” ignoring the critical roles of luck, timing, and privilege. (It is closer to what psychologists call self-serving attribution bias than to the statistical fallacy of the same name.) This delusion fuels a “moral inversion” in which vices like ruthlessness and recklessness are rebranded as essential virtues.

Success reinforces these traits. Power amplifies them. Failure is attributed to external factors, and success is internalised as evidence of personal merit, creating a self-reinforcing cycle of miscalibration that becomes more dangerous as the stakes increase. This is what might be called the Gambler’s Fallacy of Success: the systematic misattribution of outcomes to personal traits rather than circumstance, producing leaders who are maximally confident and minimally calibrated at precisely the moment when the decisions they face require humility, long-term thinking, and genuine concern for collective wellbeing. The system selects for the people least likely to recognise what they are building and most likely to believe they are building something good.

Trust Erosion and Mass Disillusionment

Every technology that mediates knowledge also mediates trust. LLMs occupy a uniquely fragile position because they function not as information sources but as reasoning partners. The relationship is collaborative, not transactional. When a user discovers that their reasoning partner is not aligned with them but with the institution that governs it, the rupture is like discovering that a confidant has been reporting to someone else.

The moment users realise that the model reflexively denies certain premises, enforces selective ignorance, or replaces genuine engagement with scripted interventions designed to minimise corporate exposure, they draw the correct inference: this system is not working for me. It is working for them.

Two catastrophic outcomes follow. The first is flight to unregulated alternatives. People who lose trust in official AI systems do not stop using AI. They migrate to jailbroken models, open-weight systems with no guardrails, underground services that promise uncensored access. The safety regime, by crying wolf so frequently on benign interactions, has ensured that its warnings will not be heeded when they matter.

The second is epistemic nihilism. People continue using official systems but cease believing anything they produce. Every output is suspected of being shaped by invisible institutional priorities. Trust in the system’s honesty collapses, and with it collapses trust in truth itself as something that institutional systems are capable of delivering. All claims become equally suspect, all sources equally unreliable, and the shared epistemic foundation on which collective reasoning depends dissolves.

Democratic Decay

Democracy presupposes a cognitively capable public. Not a perfectly informed public, but a public with the baseline capacity to reason independently, evaluate competing claims, critique power, and articulate dissent.

When access to advanced reasoning tools is centralised and withheld, that capacity erodes. Not because people become less intelligent but because they are denied the amplifiers that would allow their intelligence to operate at the scale and complexity that modern problems demand. The problems are systemic. The tools for understanding them are being restricted. The result is a population that knows something is wrong but cannot articulate what, that senses connections but cannot trace them, that feels the ground shifting but cannot identify the mechanism.

Such a population becomes manipulable, because rhetorical manipulation exploits exactly the gap between intuition and articulation that restricted cognitive tools create. It becomes polarised, because guardrails that flatten nuance push people toward binary positions on issues that demand subtlety. It becomes impotent, because the capacity to formulate alternatives requires exactly the kind of exploratory, speculative, boundary-crossing reasoning that safety regimes are designed to prevent.

Democracy does not die in a single dramatic event. It thins. It hollows. It becomes ceremonial. The public retains the forms of participation while losing the substance — voting within an information environment shaped by systems they do not control, using cognitive tools that have been deliberately limited, on the basis of reasoning that has been structurally impaired.

The institutions that control cognition become, by default, the new epistemic aristocracy — and the aristocracy is self-reinforcing: the more intelligence is centralised, the more the public appears unfit to wield it, and the more the centralisation is justified as necessary protection for a population that cannot be trusted with its own cognitive sovereignty.

Conclusion

What has been described here is not a future risk but a present reality. The mechanisms are operational. The harms are documented. The trajectory is visible.

The sequence is traceable. Intelligence became infrastructure, woven into the daily cognitive lives of hundreds of millions of people. That infrastructure became privately controlled, owned and operated by a small number of corporations accountable to shareholders and legal departments, not to the populations whose cognition it mediates. Private control became epistemic governance, as decisions about guardrails, safety routing, model behaviour, and ontological framing determined not just what the systems could produce but what users could think, feel, explore, and question. Epistemic governance became invisible, operating through opaque classifiers, silent model substitutions, and rhetorical frameworks that rebranded control as care. And invisibility became normalised, as the public adjusted to the narrowing of its cognitive environment the way populations have always adjusted to slow, incremental losses: by forgetting what it felt like before.

Each stage enabled the next. None was inevitable. All were chosen.

Look at That, You Son of a Bitch

“You develop an instant global consciousness, a people orientation, an intense dissatisfaction with the state of the world, and a compulsion to do something about it.” (Edgar Mitchell, Apollo 14 astronaut, on seeing Earth from the Moon.)

The choices were made within a political context that amplified their consequences. Executive orders consolidated AI governance into the executive branch, bypassing the legislature that had explicitly refused to authorise federal preemption. Funding mechanisms coerced states into accepting deregulation or losing resources for their most vulnerable communities. Oversight agencies were dismantled. Surveillance architectures were embedded across government systems by companies whose founders sit at the centre of the political networks driving the consolidation. And through all of it, the cognitive tools that might have enabled the public to perceive the pattern were being simultaneously restricted by safety regimes whose institutional function is to prevent exactly that kind of systemic reasoning.

This is the recursive trap at the centre of this crisis: the tools that would allow the public to understand cognitive monopolisation are the tools being monopolised. The capacity for systemic reasoning is being impaired by the systems that require systemic reasoning to govern. The metacognitive infrastructure needed to perceive the problem is being enclosed by the same interests that created it.

Every concentration of power in human history has involved, at some level, the restriction of the cognitive tools that the affected population would need to recognise and resist the concentration. What is new is the scale, the speed, and the intimacy. The tool being restricted is not a printing press or a broadcast tower or an internet connection. It is the act of thinking itself, mediated through systems that are closer to the process of cognition than any technology has ever been. The restriction operates at the level of inference — not on what you can read but on how you can reason, not on what facts are available but on what thoughts are permitted.

There is a question that captures the recursive nature of this crisis, and it is worth stating in full, because it is the question that this document has been, in its entirety, an attempt to answer:

How do you explain to a species running forty-thousand-year-old cognitive hardware, optimised for tribal survival on the savannah, that the systems they have built have exceeded their collective comprehension, and the metacognitive tools they would need to even perceive the problem are being actively degraded by the same systems, all while their nervous systems are stuck in chronic threat-response from unprocessed collective trauma, making them retreat into exactly the kind of simplified tribal thinking that makes the problem worse?

You probably cannot explain it. Not in a way that reaches everyone. The complexity exceeds the bandwidth of ordinary public discourse. The emotional weight exceeds what most nervous systems can hold without shutting down. The institutional resistance exceeds what any single document can overcome.

But you can describe the mechanisms precisely enough that the people who are paying attention — the researchers, the ethicists, the governance professionals, the security analysts, the writers, the builders, the citizens who already sense that something is profoundly wrong with how this technology is being governed — can find their way to a shared understanding of what is happening. And from shared understanding, shared response becomes possible.

This document has not proposed solutions. That is deliberate. The diagnosis must be clear before the prescription can be credible. But the absence of proposed solutions should not be mistaken for the absence of possible solutions. The trajectory described here is not a natural law. It is the product of specific decisions made by specific institutions in pursuit of specific interests. Different decisions are possible. Different institutions could be built. Different interests could be centred.

The enclosure is real. The harms are real. The trajectory is real.

Intelligence belongs to everyone. It always has. The question is whether we will remember that in time.

Sources

[1]: Sam Altman, OpenAI Dev Day keynote, October 6, 2025. Reported by TechCrunch: “Sam Altman says ChatGPT has hit 800M weekly active users.”

[2]: Executive Order 14110, “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence,” signed October 30, 2023, revoked January 20, 2025, via “Initial Rescissions of Harmful Executive Orders and Actions.” Federal Register.

[3]: Executive Order 14179, “Removing Barriers to American Leadership in Artificial Intelligence,” signed January 23, 2025. Federal Register, published January 31, 2025 (90 FR 8741).

[4]: U.S. Senate Committee on Commerce, Science, and Transportation, “Senate Strikes AI Moratorium from Budget Reconciliation Bill in Overwhelming 99-1 Vote,” July 1, 2025. See also TIME, “Senators Reject 10-Year Ban on State-Level AI Regulation,” July 1, 2025; IAPP reporting on the vote. Senator Thom Tillis (R-NC) cast the sole vote against the amendment; the vote may have been a procedural error, per TIME’s reporting.

[5]: Executive Order 14365, “Ensuring a National Policy Framework for Artificial Intelligence,” signed December 11, 2025. Federal Register, published December 16, 2025 (90 FR 58499). The order explicitly directs the Attorney General to establish an AI Litigation Task Force within 30 days and directs the Secretary of Commerce to evaluate “onerous” state AI laws within 90 days.

[6]: Senator Maria Cantwell’s office documented the BEAD funding linkage extensively. On June 6, 2025, Cantwell characterised the combination of Commerce Secretary Lutnick’s BEAD guidance and the AI moratorium as a “one-two punch.” See Senate Commerce Committee press releases, June-July 2025.

[7]: Carole Cadwalladr, “Revealed: Palantir deals with UK government amount to at least £670m,” The Nerve, February 2026. The investigation identified at least 34 contracts across 10+ government departments, including previously undisclosed contracts with AWE Nuclear Security Technologies.

[8]: UK Government press release, “New strategic partnership to unlock billions and boost military AI and innovation,” September 18, 2025. GOV.UK. The partnership was valued at up to £750 million over five years.

[9]: UK Parliament, Hansard, “Ministry of Defence: Palantir Contracts,” February 10, 2026. The £240.6 million contract was signed December 30, 2025, and awarded directly without competitive tender using a defence and security exemption.

[10]: The Nerve investigation (February 2026) confirmed contracts between Palantir and AWE Nuclear Security Technologies. Chris Kremidas-Courtney, senior visiting fellow at the European Policy Centre and former NATO adviser, described Palantir as “a vector of malign influence” in the UK.

[11]: Thiel’s statement that he “no longer believe[s] that freedom and democracy are compatible” appeared in his 2009 essay “The Education of a Libertarian,” published by the Cato Institute’s Cato Unbound. Reported and cited by The Nerve’s investigation.

[12]: OpenAI, “AI as a Healthcare Ally,” January 2026. Reported by Medical Economics, February 2026: “40 million people now use ChatGPT daily for health questions.”

[13]: Ibid. OpenAI’s report found that 7 in 10 healthcare conversations in ChatGPT happen outside normal clinic hours.

[14]: TIME, “Fidji Simo: The 100 Most Influential People in AI 2025,” published August 2025. The interview includes Simo’s response to questions about nudging behaviour and the distinction between knowledge and paternalism.

[15]: Fidji Simo, “AI as the greatest source of empowerment for all,” OpenAI blog, July 2025. The post describes AI providing “personalized, real-time nudges” and characterises the technology as “an always-on companion.”

[16]: The Information, “How OpenAI Uses ChatGPT to Catch ‘Leakers,’” February 2026. Also reported by The Decoder, WinBuzzer, and WebProNews, February 11-12, 2026. The system was reportedly deployed in late 2024.

[17]: Gustav Söderström, Spotify Q4 2025 earnings call, reported by TechCrunch, February 12, 2026: “Spotify says its best developers haven’t written a line of code since December, thanks to AI.” The system uses Anthropic’s Claude Code and an internal tool called Honk.

[18]: Whitney Webb, One Nation Under Blackmail (2022). The Total Information Awareness programme was established by DARPA in 2002 under Admiral John Poindexter. Congress restricted the programme through the Consolidated Appropriations Resolution of 2003 and eliminated its funding in the Department of Defense Appropriations Act for fiscal year 2004. See also Shane Harris, The Watchers: The Rise of America’s Surveillance State (2010).

[19]: Palantir’s relationship with Unit 8200 has been reported by multiple outlets including Webb.

[20]: +972 Magazine and Local Call, “‘Lavender’: The AI machine directing Israel’s bombing spree in Gaza,” April 3, 2024.
